The GPT-3 AI that blogs: What machines can do as well as people

What would some authors give for introductions that write themselves, or for statistics and infographics that generate automatically? Unless someone takes the trouble to compose and compile these elements, an article is left with gaping holes. But the work is tedious and uncreative, which is why many writers put it off.

GPT-3 could help with these tasks in the future. It is the third generation of the AI-powered language model from the San Francisco-based developer OpenAI, and it has caused a sensation with how well it fills in the gaps. It is so good that it unleashed a wave of hype and hysteria in mid-2020, along with the fear that GPT-3 will soon take away the jobs of designers and authors.

The hype is understandable, since GPT-3 represents an evolutionary leap forward. Has Clippy, the talking paper clip from Microsoft Word, been building its own websites lately? Hardly; in that respect, not much has changed since Windows 98. Still, one thing is clear: we are far from the point at which machines surpass us.

Even so, as a language service provider we are already interested in how our industry can use the new technology productively, which is why we are tracking its development. GPT-3 may also put wind in the sails of CAT tools such as Across or SDL Trados Studio, of machine translation post-editing, and even of fully automatic machine translation.

The abbreviation stands for "Generative Pre-trained Transformer," and the "3" indicates that the model is in its third version. Each part of the name describes what the model is:

- Generative: it generates output

- Pre-trained: it does so based on previous training of a flexible deep learning language model

- Transformer: that language model is based on the Transformer architecture developed at Google
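At the heart of the Transformer architecture is an operation called attention: for each word, the model computes a weighted average over the words that came before it, with the weights reflecting how relevant each earlier word is. The following minimal Python sketch shows scaled dot-product attention with a causal mask, the variant GPT-style models use so that a word can only "see" earlier words. It is a simplification under stated assumptions: the toy vectors are made up, and the learned query, key, and value projections, multiple attention heads, and stacked layers of a real model are left out.

```python
import math

def softmax(scores):
    """Turn a list of scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention where position i may only attend to positions 0..i.

    Each argument is a list of equal-length vectors (lists of floats), one per token.
    In a real Transformer these come from learned projections of the token embeddings;
    here they are passed in directly to keep the sketch small.
    """
    d = len(queries[0])
    outputs = []
    for i, q in enumerate(queries):
        # Score the current query against every key up to and including position i.
        scores = [sum(qk * kk for qk, kk in zip(q, keys[j])) / math.sqrt(d)
                  for j in range(i + 1)]
        weights = softmax(scores)
        # The output is the attention-weighted average of the visible value vectors.
        out = [sum(w * values[j][k] for j, w in enumerate(weights))
               for k in range(len(values[0]))]
        outputs.append(out)
    return outputs

# Three toy "tokens" with 2-dimensional vectors (hypothetical numbers).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
result = causal_self_attention(x, x, x)
print(result[0])  # prints [1.0, 0.0]: the first token can only attend to itself
```

Because of the causal mask, changing a later word never changes the output for earlier positions, which is what allows the model to generate text one word at a time from left to right.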

The story

In mid-2020, an article written by a blogging machine was upvoted to the top of the rankings on Hacker News. The headline read: "Feeling unproductive? Maybe you should stop overthinking".

College student Liam Porr, who was behind the experiment, described his approach on his personal blog: he gave GPT-3 short prompts, and the language model wrote the rest. Only a few visitors guessed that the author was a machine, while most readers of the self-help and productivity blog upvoted the post.

Liam Porr drew a lesson and a vision of the future from this experience:

GPT-3 can write beautiful prose, which is remarkable for a computer. But according to Porr, the language model is much weaker when it comes to logic and rational thinking. It is unsuited to fact-based writing: without fact-checking, it produces arbitrary sentences that only seem coherent on the surface. If such content reaches unsuspecting readers, the consequences could be serious.

Porr considers it realistic that GPT-3 could save employees time by automating routine writing tasks wherever possible.

While one distraught blog commenter has expressed fears that the language generator could destroy the bond between author and reader and thus ruin the medium, author Rionaldi Chandraseta suggests that we need to reconsider our quality standards.

Even if a blog post written by GPT-3 attracts a lot of attention at first, its quality still leaves much to be desired. Self-help articles are often full of empty filler, which is why a blogging robot can pass as a writer in this genre without being noticed. But the AI simply cannot keep up with thoroughly researched texts on complex topics written by a real author.

Still, GPT-3 has much more potential. How can it be unlocked?

How the AI behind GPT-3 works

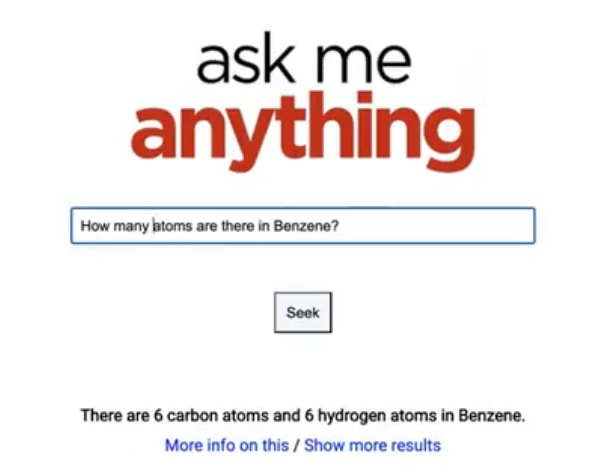

What autocomplete does on a small scale, GPT-3 does on a much larger one, which makes it the Great Gatsby of chatbots. You give it a few words or sentences, and with just a few settings the language AI produces pages of text.

At its core is a neural network with 175 billion parameters. In AI development, the rule of thumb is: the more, the better. More data, drawn from a greater variety of sources, allows for more suitable responses to prompts.
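To put 175 billion parameters into perspective, a quick calculation helps. The sketch below assumes that each parameter is stored as a 16-bit floating-point number (2 bytes); that storage format is an assumption, and the estimate covers only the model's weights, not its training data.

```python
# Back-of-the-envelope estimate: how much memory do 175 billion parameters need?
# Assumption: each parameter is stored as a 16-bit (2-byte) floating-point number.
PARAMETERS = 175_000_000_000
BYTES_PER_PARAMETER = 2  # float16; 32-bit floats would double this

total_bytes = PARAMETERS * BYTES_PER_PARAMETER
total_gigabytes = total_bytes / 1e9  # decimal gigabytes

print(f"{total_gigabytes:.0f} GB")  # prints "350 GB"
```

Roughly 350 GB is far more than a single graphics card can hold, so a model of this size has to be spread across several accelerators just to run, one reason it is so demanding in hardware and energy.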

Yet the machine never knows what it is saying. As far as it can tell, real news is just as true as fiction, and the actor Johnny Depp is just as real as Captain Jack Sparrow, the role he plays in Pirates of the Caribbean.

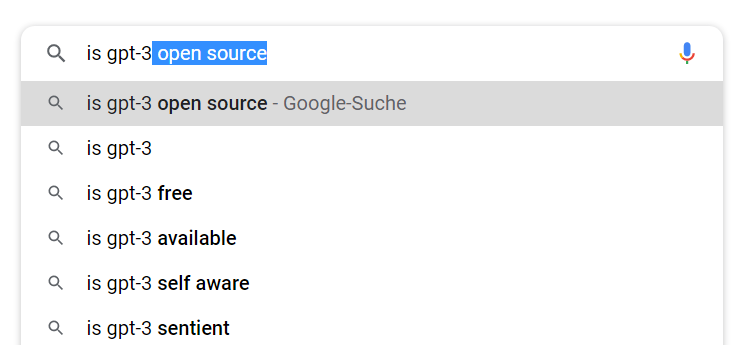

However, these machines have been fed vast amounts of text from the web, including the whole of Wikipedia and pages shared on Reddit. As a result, their answers sound amazingly human.

Yet computer scientist Yonatan Bisk is not impressed: "And nobody is surprised that if you memorize more, you can do more."

What the transformer can do in a nutshell:

- Generate a huge amount of text in just a few seconds

- Write program code on command, even though it was not specifically built for this task

This is where pre-training pays off. GPT-3 can follow instructions entered as plain text, and it is proficient in programming languages.

This makes the language model a generalist, a true jack-of-all-trades, which does not have to be taught everything individually.

It is particularly adept at:

- Coding

- Completing sentences

- Laying out images and designs

- Solving simple logic tasks, such as drawing analogies

In theory, GPT-3 could even use its coding skills to write language model software “in its own image” (to underscore its supremacy over all other AIs).

Popular uses of the technology have already emerged for which GPT-3 was never specifically programmed or trained. Developers with access to the prototype version can adapt it to specific applications, such as a chatbot, a web design tool, a programming assistant, or an intelligent search engine.

All the user needs to do is provide a prompt.

Type a prompt such as "Chocolate cakes are...", and the transformer predicts, word by word, which terms are most likely to follow, based on the patterns it has learned about chocolate cake, and produces whole sentences and paragraphs. Thanks to the immense amount of data it was trained on, the text remains largely coherent.

That is, until the most likely terms drift away from the topic, so that the text becomes more and more arbitrary and, at some point, simply wrong.
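The idea of repeatedly choosing the most frequent next word can be illustrated with a deliberately simple stand-in. GPT-3 does not count word pairs; it uses a neural network with billions of parameters. The toy bigram model below, trained on a made-up four-sentence corpus, only shows the principle: it always appends whichever word most often followed the previous one. The function names and corpus are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers

def greedy_continue(followers, prompt, length):
    """Extend the prompt by repeatedly appending the most frequent next word."""
    words = prompt.lower().split()
    for _ in range(length):
        candidates = followers.get(words[-1])
        if not candidates:
            break  # the last word never had a successor: nothing to predict
        # most_common(1) breaks ties in favour of the word seen first
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

# A tiny, made-up training corpus (hypothetical).
corpus = (
    "chocolate cakes are sweet and rich . "
    "chocolate cakes are popular at parties . "
    "parties are fun and loud . "
    "sweet and rich desserts are popular ."
)
model = train_bigrams(corpus)
print(greedy_continue(model, "Chocolate cakes are", 8))
# prints "chocolate cakes are popular at parties . chocolate cakes are popular"
```

After a few steps the toy model runs back into its own prompt and starts repeating itself, a small-scale version of text that drifts and loops.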

Or just try to give it an assignment like: "Write an essay about the development of the American colonies that became the US, and compile statistics about the population growth for all the colonies up to the election of the first US president."

Drawing on the Wikipedia facts it has ingested, GPT-3 not only writes the essay but also generates a formatted table with the colonies and their population figures by year.

Philosophical wisdom can also be wrested from the language model with a prompt like: "Dear human philosophers, I read your comments on my abilities and limitations with great interest." The full answer, which revolves around lying robots, is surprisingly honest and makes for an entertaining and worthwhile read.

GPT-3 still doesn't know what it's talking about, but it generated the essay in just half a minute. Of course, the essay also contains utter nonsense, because the model combines very common terms into sentences without any control over their meaning, something the machine openly admits in several paragraphs.

Often it works well, but just as often it is completely wrong. GPT-3 has not yet graduated from high school. For now, the developers have placed their super-transformer under house arrest, and visits are permitted only under strict supervision. GPT-3 is also available only to entrepreneurs who apply to OpenAI with ethically sound ideas. The fear that the technology will be manipulated and abused is simply too great.

What tasks the language generator is capable of handling

The language model can, for example, be used to write an op-ed. The Guardian noted that editing GPT-3's output into publishable form took less time than editing many op-eds written by humans.

It also handles small, useful layout and search engine scripts well. It is a jack-of-all-trades, arguably the first general-purpose artificial intelligence.

The potential of GPT-3 could extend well beyond text: computers can represent any format or media type, because they process everything as data, without regard to what it represents. To a computer, books, videos, and radio streams are all just code. That the machine makes no fundamental distinction between these formats is an advantage that future versions of the language model may be able to exploit further.

It has also long been a dream to instruct machines in natural language (“no code”) to perform complex tasks. GPT-3 could soon greatly expand the so far rather limited capabilities of voice assistants.

A general-purpose approach opens up all sorts of possibilities. When fed pixel sequences instead of words, the same kind of model can complete images, as OpenAI showed with Image GPT. And with its Jukebox software, OpenAI can also generate music at the click of a button.

There are still some areas where the deep learning of GPT-3 falls short

Every marketer's dream: texts that are produced in no time, need minimal editing, and make readers' hearts beat faster. Can GPT-3 deliver? The Guardian experimented with it and printed the result.

OMR also tested it. The verdict: mixed, but optimistic. On the one hand, many of the generated texts are judgmental and pointless, and therefore of no use to marketers. The language generator also does not take facts very seriously. It generalizes, and in doing so it reinforces prejudices, invents false information, and produces shameless advertising.

Some of its autocompletions make no sense. A short chat between the OMR journalist and the bot produced similar results: when he asked for its name, GPT-3 couldn't answer. Fifty-five years after ELIZA, chatbots still stumble over small talk. It turns out that people are even more complicated than machines.

On the other hand, GPT-3 handles a few types of text surprisingly well. With just a little tweaking, the bot came up with a LinkedIn post, an Amazon product page, and an About Us page that were all presentable.

The transformer still needs a full-time babysitter to double-check the freestyle poetry and fix a few grammatical errors. The KI-Pedia on Lernen-wie-maschinen.ai provides a nice summary of the downsides.

Fear of abuse: Fake news, spam, and clickbaiting

Liam Porr found it scarily easy to top the Hacker News ranking with GPT-3, which suggests that the developers' fear of manipulation and abuse is justified. Specifically, they fear

- the targeted production and dissemination of false information: the AI could generate several hundred fake news articles in just a few minutes, which could be disseminated online.

- fully automated keyword spamming: using the same approach, GPT-3 could trick search engines by flooding the web with thin articles generated from keyword prompts.

Porr believes the technology could also facilitate clickbait: the transformer can produce texts of a certain length on simple but popular topics, so it could churn out masses of substandard articles to flood the Internet. This kind of abuse could damage the reputation of online content and increase the pressure on search engines and content curators to sift through the daily deluge of information.

Set an example for robots – Calibrating the Internet against prejudices

Because it recombines patterns from its training data, the language generator can reproduce all kinds of stereotypes and prejudices. Its texts can turn out even more discriminatory and racist than the average source text, since biased and false information scattered throughout the training set only makes things worse.

Part of the reason lies in few-shot learning, in which the model is shown just a few examples in the prompt instead of being retrained for each task. OpenAI's model often produces amazingly accurate and varied texts from just a few such templates. However, any tendency toward stereotypes and prejudices present in the underlying data is carried over and can even be amplified.
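In GPT-3's case, few-shot learning means placing a handful of solved examples directly in the prompt; the model's weights are not changed. The minimal sketch below assembles such a prompt. The Input/Output layout, the function name build_few_shot_prompt, and the sentiment examples are illustrative assumptions, not a required format.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a few-shot prompt: an instruction, a few solved examples, then the new case.

    The model is not retrained; it is expected to continue the text after the final "Output:".
    """
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")  # blank line separates the examples
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)

# Hypothetical examples; the model takes its cues from whatever pattern they share.
examples = [
    ("The product arrived late.", "negative"),
    ("Great value for money!", "positive"),
]
prompt = build_few_shot_prompt(
    "Classify the sentiment of each review.", examples, "The cake was delicious."
)
print(prompt)
```

Because the model takes its cues from so few examples, together with patterns from its training data, any slant in either can carry straight through to the result.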

This cannot be fully compensated for without compromising the AI's effectiveness, because restricting generation with prohibitions generally also limits its productivity.

A text generator without a consciousness, a mouth without a brain

As mentioned, the transformer is a text producer without consciousness and will generate sentences with any kind of content. It cannot prevent misrepresentations, because it has no way of checking the content of what it writes. Endlessly calculating the next word on the basis of probability alone carries an immense risk of producing misinformation.
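In practice, GPT-style models usually do not always take the single most likely word; they draw the next word at random according to its probability. The sketch below assumes made-up scores for four candidate words after "Chocolate cakes are" and converts them into probabilities with a softmax and a temperature parameter, a common sampling scheme. It illustrates the principle only and is not OpenAI's implementation; the function names are hypothetical.

```python
import math
import random

def next_word_probabilities(scores, temperature=1.0):
    """Convert raw scores (logits) into probabilities with a temperature-scaled softmax."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {w: s / temperature for w, s in scores.items()}
    m = max(scaled.values())  # subtract the maximum for numerical stability
    exps = {w: math.exp(s - m) for w, s in scaled.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}

def sample_next_word(scores, temperature=1.0, rng=random):
    """Draw one word at random, weighted by its probability."""
    probs = next_word_probabilities(scores, temperature)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]

# Hypothetical scores a model might assign after "Chocolate cakes are ..."
candidate_scores = {"delicious": 3.0, "sweet": 2.5, "expensive": 1.0, "blue": -2.0}
print(next_word_probabilities(candidate_scores, temperature=0.7))
print(sample_next_word(candidate_scores, temperature=0.7, rng=random.Random(42)))
```

Even the nonsensical candidate "blue" keeps a small but non-zero probability. Over thousands of words, such unlikely choices will occasionally be made, which is one way false statements slip into otherwise fluent text. Lowering the temperature makes the output more predictable but also more repetitive.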

This kind of text generation based on learned patterns also leads to repetition. By the rules of probability, repetition is perfectly logical, but it will raise a human reader's hackles.

Machines without human overseers

Without continuous monitoring and post-processing, the results are often disappointing. The longer the text, the more it loses coherence and the more random, false assertions creep in.
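A simple back-of-the-envelope model shows why length matters. Assume, purely for illustration, that each sentence is correct with the same probability p, independently of all the others (real errors are not independent, so this is only a rough intuition). The chance that a text of n sentences contains no error at all then shrinks exponentially:

$$
P(\text{no error in } n \text{ sentences}) = p^{n}, \qquad 0.99^{10} \approx 0.90, \qquad 0.99^{100} \approx 0.37
$$

Under these assumptions, even at 99 percent accuracy per sentence, a 100-sentence text is more likely than not to contain at least one error.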

Writing texts oneself – for the sake of the environment

Then there is the fact that GPT-3 also burdens the environment. Training it on huge datasets and generating text in real time consume enormous amounts of energy, which is why environmentally conscious companies should be wary of using the technology intensively.

At a time when sustainable, responsible business is becoming less and less optional for the global economy, the chatterbot needs an energy-saving button for its never-ending flow of text.

That said, GPT-3 could already run an Amazon store, supplying its colleagues in machine translation with text for product listings.

Areas where AI language models can really help us out

How justified is the fear that machines will soon replace us? The transformer has already rattled copywriters and designers, but it is no Terminator.

The bottom line: these first attempts are nowhere near the apocalyptic scenarios that some people fear. The AI simply can't do enough on its own, especially without meaningful prompts and critical review.

Given the applications developers have already come up with, one prediction seems obvious: GPT-3's successors could be great assistants for software development, teaching, and understanding texts at every language level.

The next few years promise unlimited possibilities for new AI assistants in the home, but not unlimited opportunities for completely replacing people in their jobs.

The healthcare sector, for example, is inundated with new studies every day. The language model has already shown that it can summarize complex texts, even in simplified language if desired. A GPT-3-powered app could present overwhelmed researchers with the key findings of all the day's publications on a single page.

Writers also talk shop about the role the text generator could play in their work. In their podcast, Joanna Penn and Paul Bellows aim to show listeners how the technology can make work easier and boost creativity. They are careful to claim only that AI will boost creativity, not replace it. Still, Bellows says he can write twice as many words per day thanks to the AI and hasn't been troubled by writer's block since.

Given a creative prompt, GPT-3 sometimes produces something useful and sometimes nonsense, so human writers have not put down their pens yet. Reassuringly, the first novels by the likes of C.D. Blank and G.P. Transformer are still a long way off. Still, AI may well help rescue a manuscript from gathering dust in a drawer.

The benefits of GPT-3 for machine translation, post-edited machine translation, and specialized human translation

Thanks to their speed and capacity, computers remain attractive tools for tasks that can be automated. The transformer has certainly wounded our pride as copywriters, and we are keeping a close eye on its development. If it one day proves suitable for professional translation, we will be happy to add it to our toolkit.